Over our years in this field, our company has developed hundreds of site parsers. It's no secret that many websites restrict automated reading of their content: they cap the number of requests within a given time period, limit access by IP address, use complicated page layouts, and change the site's structure regularly. Developers have many countermeasures in their arsenal, including proxy servers and heuristic and adaptive algorithms. For the reasons described above, we usually bill such projects on a time-based payment scheme.
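To make two of these countermeasures concrete, here is a minimal sketch of request throttling combined with proxy rotation. It is an illustration only, not our production code: the class name `RotatingFetcher`, its parameters, and the injected `fetch` callable are all hypothetical, and the sketch assumes that a blocked or failed request surfaces as an `OSError`.

```python
import itertools
import time
from typing import Callable, Iterable, Optional


class RotatingFetcher:
    """Hypothetical helper: spaces requests out and rotates proxies on each attempt."""

    def __init__(
        self,
        fetch: Callable[[str, Optional[str]], str],  # assumed signature: (url, proxy) -> body
        proxies: Iterable[Optional[str]],
        min_interval: float = 1.0,   # minimum seconds between two requests
        max_attempts: int = 3,       # attempts per URL before giving up
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        proxy_list = list(proxies)
        if not proxy_list:
            raise ValueError("at least one proxy (or None for a direct connection) is required")
        self._fetch = fetch
        self._proxies = itertools.cycle(proxy_list)
        self._min_interval = min_interval
        self._max_attempts = max_attempts
        self._clock = clock
        self._sleep = sleep
        self._last_request: Optional[float] = None

    def _wait_for_slot(self) -> None:
        # Sleep just long enough to keep min_interval between consecutive requests.
        if self._last_request is not None:
            remaining = self._min_interval - (self._clock() - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
        self._last_request = self._clock()

    def get(self, url: str) -> str:
        last_error: Optional[Exception] = None
        for _ in range(self._max_attempts):
            self._wait_for_slot()
            proxy = next(self._proxies)  # a different proxy on every attempt
            try:
                return self._fetch(url, proxy)
            except OSError as exc:  # assumption: blocks and network errors raise OSError
                last_error = exc
        raise RuntimeError(f"all {self._max_attempts} attempts failed for {url}") from last_error
```

The `fetch` callable is injected so the throttling and rotation logic can be exercised without touching the network. In a real parser it might wrap an HTTP client configured with the given proxy, and the retry condition would likely also inspect HTTP status codes.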

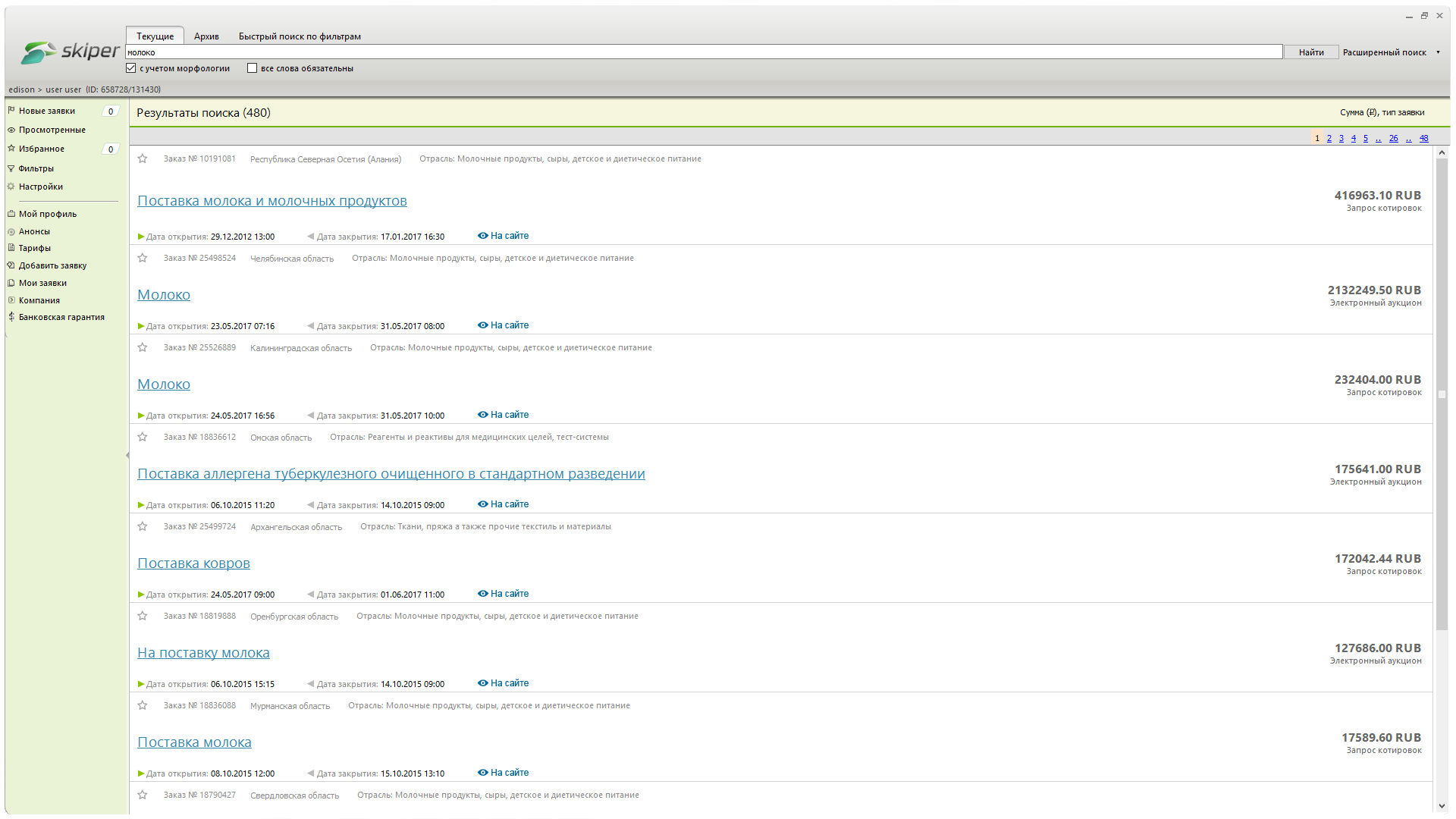

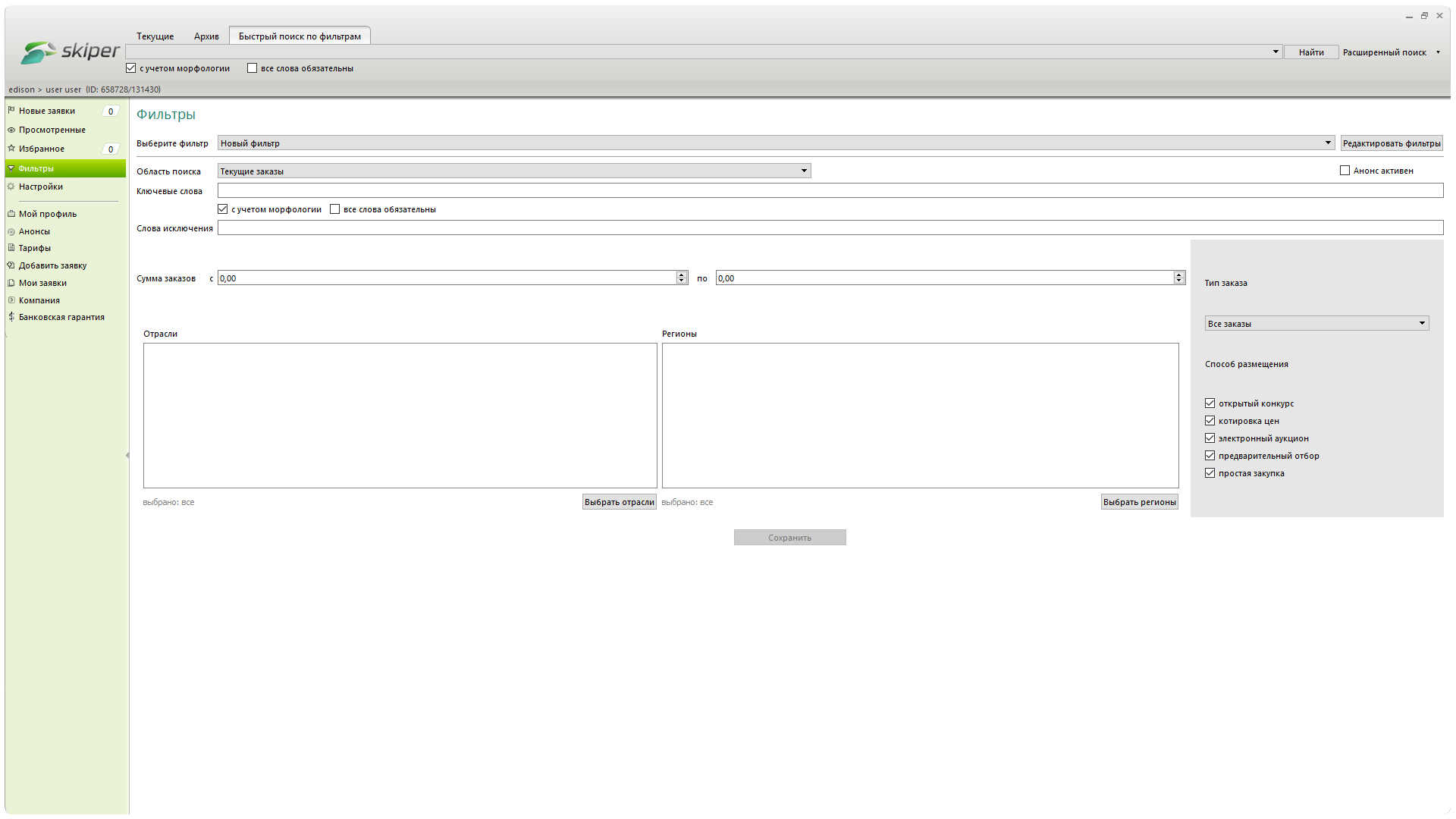

Tenders and information aggregators

May 14, 2015

2002–2026
